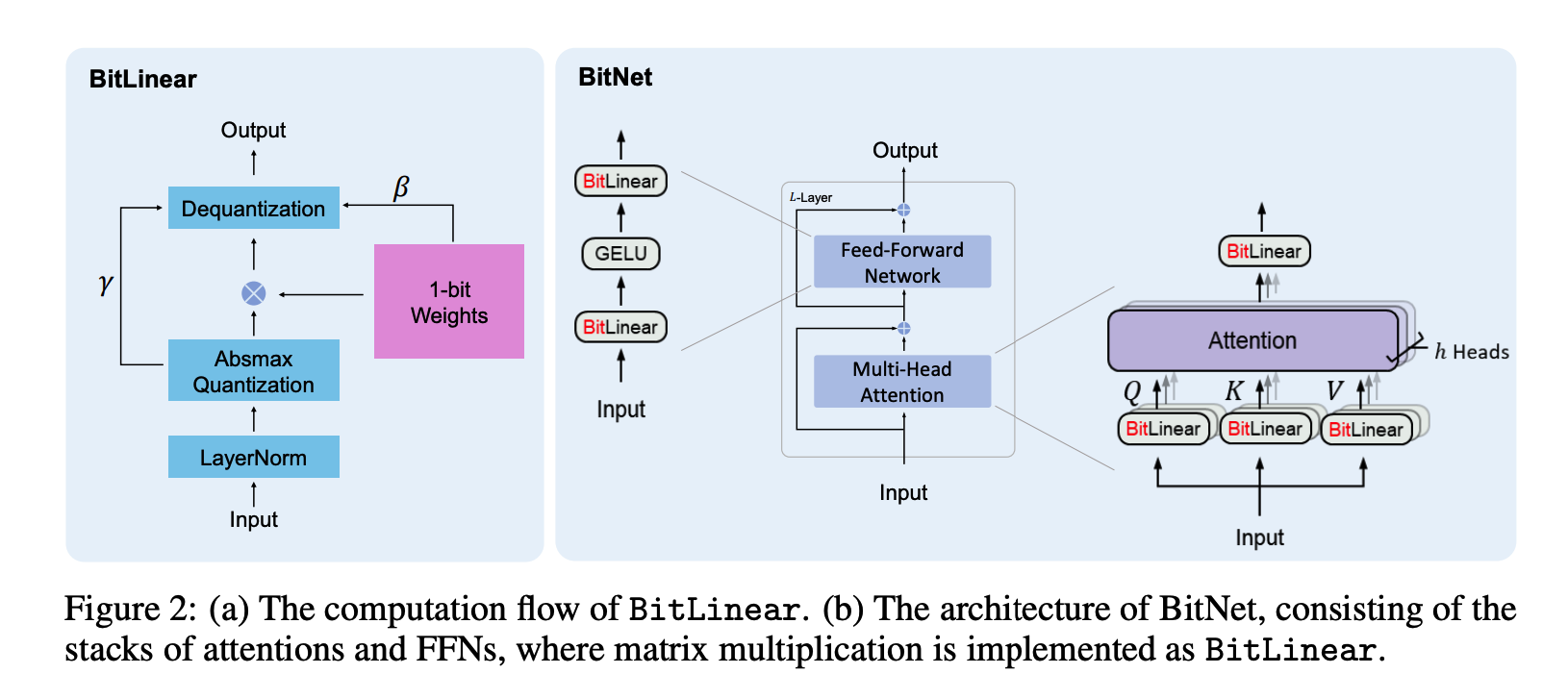

BitLinear

Quantization

Binarization of weights

The first step of BitLinear is to binarize the weights with the signum function.

A scaling factor $\beta = \frac{1}{nm}\lVert W \rVert_1$ is applied after binarization to reduce the $\ell_2$ error between the real-valued and binarized weights.

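Mathematical steps:

$$\widetilde{W} = \operatorname{Sign}(W - \alpha)$$

$$\operatorname{Sign}(W_{ij}) = \begin{cases} +1, & \text{if } W_{ij} > 0 \\ -1, & \text{if } W_{ij} \le 0 \end{cases}$$

$$\alpha = \frac{1}{nm} \sum_{ij} W_{ij}$$

Subtracting $\alpha$ centralizes the weights to zero mean before binarization.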

Semantic understanding of the math:

- $W$ - weight matrix (of the linear layer)

- $W_{ij}$ - one specific entry of that matrix

- $\operatorname{Sign}$ - signum function

- $n$ - number of rows

- $m$ - number of columns

- $\alpha$ - the mean (average) of all the weights in $W$
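A minimal pure-Python sketch of the binarization step follows. It assumes the weight matrix is a list of rows of floats (a real implementation would operate on tensors), and the function names are hypothetical. It computes $\alpha$, the binarized matrix $\operatorname{Sign}(W - \alpha)$, and the scaling factor $\beta = \frac{1}{nm}\lVert W \rVert_1$ (the mean absolute weight). It then compares the squared $\ell_2$ error of $\widetilde{W}$ and of $\beta\widetilde{W}$ against $W$.

```python
def binarize_weights(W):
    """Return (W_bin, alpha, beta) for a weight matrix W given as a list of rows."""
    n, m = len(W), len(W[0])  # n rows, m columns
    # alpha: mean of all n*m weights, used to center W at zero before taking the sign
    alpha = sum(sum(row) for row in W) / (n * m)
    # Sign(W - alpha): +1 where the centered weight is > 0, -1 where it is <= 0
    W_bin = [[1 if w - alpha > 0 else -1 for w in row] for row in W]
    # beta: ||W||_1 / (n*m), i.e. the mean absolute weight
    beta = sum(abs(w) for row in W for w in row) / (n * m)
    return W_bin, alpha, beta


def squared_l2_error(W, W_approx):
    """Sum of squared element-wise differences between two matrices."""
    return sum((a - b) ** 2 for ra, rb in zip(W, W_approx) for a, b in zip(ra, rb))


W = [[1.0, -0.5], [0.75, 0.25]]
W_bin, alpha, beta = binarize_weights(W)
scaled = [[beta * s for s in row] for row in W_bin]  # beta * W_bin

print(alpha, beta)                  # 0.375 0.625
print(W_bin)                        # [[1, -1], [1, -1]]
print(squared_l2_error(W, W_bin))   # 1.875
print(squared_l2_error(W, scaled))  # 0.9375
```

In this toy example, scaling by $\beta$ halves the squared error. Note that the entry 0.25 maps to $-1$ because it lies below $\alpha = 0.375$.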

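Absmax quantization: scales activations into the range $[-Q_b, Q_b]$ by multiplying by $Q_b$ and dividing by the absolute maximum of the input:

$$\widetilde{x} = \operatorname{Quant}(x) = \operatorname{Clip}\left(x \times \frac{Q_b}{\gamma},\ -Q_b + \epsilon,\ Q_b - \epsilon\right)$$

$$\operatorname{Clip}(x, a, b) = \max(a, \min(b, x)), \qquad \gamma = \lVert x \rVert_\infty$$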

Semantic understanding of the math:

The clip function forces (clips) each value into the interval $[-Q_b + \epsilon,\ Q_b - \epsilon]$.
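The clip function $\operatorname{Clip}(x, a, b) = \max(a, \min(b, x))$ is small enough to write directly. Here is a sketch; `clip` is our own helper, not a library call, and the bounds are illustrative.

```python
def clip(x, a, b):
    """Clip(x, a, b) = max(a, min(b, x)): force x into the interval [a, b]."""
    return max(a, min(b, x))


values = [-150.0, -20.0, 0.0, 90.0, 300.0]
# Values outside [-127.5, 127.5] snap to the nearest bound; the rest pass through
print([clip(v, -127.5, 127.5) for v in values])  # [-127.5, -20.0, 0.0, 90.0, 127.5]
```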

What are $b$ and $Q_b$, and what is the range?

- Target range: $[-Q_b, Q_b]$, with $Q_b = 2^{b-1}$, where $b$ is the number of bits used for the activations

Example:

- If $b = 8$, then $Q_b = 2^{7} = 128$

- The range is roughly $[-128, 128]$
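The relation $Q_b = 2^{b-1}$ can be checked for a few bit widths. This is a sketch with a hypothetical helper name.

```python
def absmax_target_range(b):
    """Return (-Q_b, Q_b) with Q_b = 2**(b - 1) for a b-bit activation budget."""
    q_b = 2 ** (b - 1)
    return -q_b, q_b


for b in (2, 4, 8):
    print(b, absmax_target_range(b))
# 2 (-2, 2)
# 4 (-8, 8)
# 8 (-128, 128)
```

A signed 8-bit integer holds $[-128, 127]$, so a value of exactly 128 would not fit. This is the kind of edge case that the small margin in the clip bounds is there to guard against.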

This is the integer range the activations should lie in after scaling and clipping.

The $\epsilon$ (a small floating-point number) pulls the bounds slightly inside that range. This avoids edge cases such as overflow when the values are later cast or rounded to an integer type.
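The following sketch makes the overflow point concrete. It assumes $b = 8$, an illustrative $\epsilon = 10^{-3}$, and a cast that truncates toward zero (as Python's `int()` does). Clipping to exactly $Q_b$ leaves 128, which does not fit in a signed 8-bit integer. Clipping to $Q_b - \epsilon$ and then truncating gives 127. Rounding to nearest would turn $128 - \epsilon$ back into 128, so the margin helps only with truncating casts.

```python
Q_B = 2 ** (8 - 1)  # Q_b for b = 8 -> 128
EPS = 1e-3          # illustrative small epsilon (assumption)
INT8_MIN, INT8_MAX = -128, 127


def clip(x, a, b):
    """Clip(x, a, b) = max(a, min(b, x))."""
    return max(a, min(b, x))


def fits_int8(v):
    """True if v is inside the signed 8-bit integer range."""
    return INT8_MIN <= v <= INT8_MAX


x = 128.0  # an activation already scaled exactly to the top of the range
no_margin = int(clip(x, -Q_B, Q_B))                # int() truncates toward zero
with_margin = int(clip(x, -Q_B + EPS, Q_B - EPS))  # 127.999 truncates to 127

print(no_margin, fits_int8(no_margin))      # 128 False
print(with_margin, fits_int8(with_margin))  # 127 True
```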

$\gamma$ is the infinity norm of $x$, i.e. the maximum absolute value among all the entries of $x$:

$$\gamma = \lVert x \rVert_\infty = \max_i \lvert x_i \rvert$$

E.g. for $x = [-10, 5, 3, 2, 6]$, $\gamma = \lvert -10 \rvert = 10$.
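Here is a minimal pure-Python sketch of the whole absmax quantization, $\operatorname{Clip}(x \times Q_b/\gamma, -Q_b + \epsilon, Q_b - \epsilon)$ with $\gamma = \lVert x \rVert_\infty$. It is applied to the example vector with $b = 8$. The function name `absmax_quantize` and the default $\epsilon = 10^{-5}$ are illustrative assumptions. The output is still floating point; any rounding or integer cast is a separate step.

```python
def absmax_quantize(x, b=8, eps=1e-5):
    """Scale x into roughly [-Q_b, Q_b] using its absolute maximum, then clip.

    Assumes x is not all zeros (otherwise gamma would be 0).
    """
    q_b = 2 ** (b - 1)              # Q_b = 2^(b-1)
    gamma = max(abs(v) for v in x)  # gamma = ||x||_inf
    lo, hi = -q_b + eps, q_b - eps  # bounds pulled inside by eps
    return [max(lo, min(hi, v * q_b / gamma)) for v in x], gamma


x = [-10, 5, 3, 2, 6]
x_q, gamma = absmax_quantize(x)
print(gamma)  # 10
print(x_q)    # approximately [-127.99999, 64.0, 38.4, 25.6, 76.8]
```

Only the largest-magnitude entry lands on a clip bound. Every entry is scaled by the same factor $Q_b/\gamma = 12.8$.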