$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$
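
A sketch of the shapes involved may help when reading the formula. The symbols $n$, $m$, and $d_v$ are notation assumed here (number of queries, number of key-value pairs, and value dimension); $d_k$ is the key dimension:

$$
Q \in \mathbb{R}^{n \times d_k}, \quad K \in \mathbb{R}^{m \times d_k}, \quad V \in \mathbb{R}^{m \times d_v}
$$

$$
\frac{QK^T}{\sqrt{d_k}} \in \mathbb{R}^{n \times m}, \qquad \text{Attention}(Q, K, V) \in \mathbb{R}^{n \times d_v}
$$

The softmax is applied row-wise, so each of the $n$ rows of attention weights sums to 1.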

Design

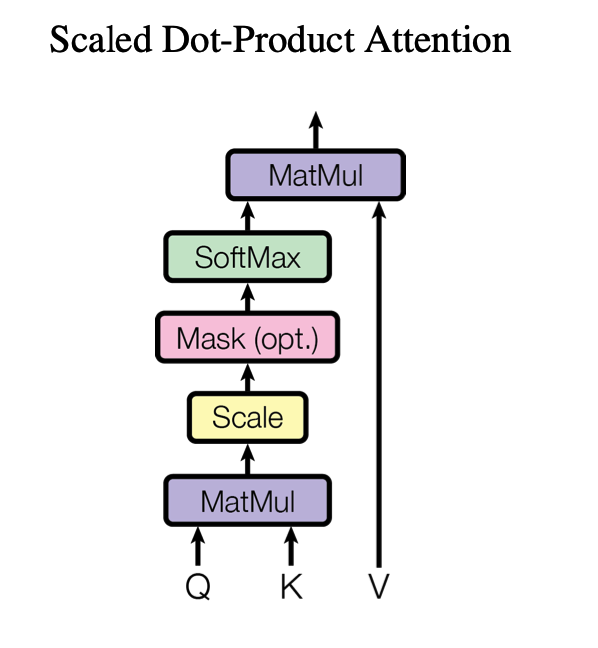

Scaled Dot-Product Attention architecture

Key:

- MatMul: Matrix multiplication of Q with the transpose of K, which produces the raw attention scores

- Scale: The raw scores are divided by $\sqrt{d_k}$, where $d_k$ is the dimension of the key vectors

- Mask (Optional): Applied before the softmax to prevent attending to certain positions (for example, future tokens in a decoder, or padding)

- Softmax: Turns the scaled scores into a probability distribution that emphasizes the most relevant tokens

- Second MatMul (Softmax Output x V): Multiplies the softmax weights by the value matrix (V), producing a weighted sum of the values based on how much attention each token deserves

- Q (Query): The vector (or set of vectors) that represents the input doing the asking; it plays the role of a question posed to the other inputs

- K (Key): A key is associated with one part of the input and acts as an identifier for that piece of information. Given a query, you compare it against all of the keys to determine how relevant each part of the input is to that query

- V (Value): Contains the actual information or content that will be aggregated. After attention computes a relevance score (usually through a dot product of the query with each key), these scores are used to weight the corresponding value vectors to produce the final output
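
The steps in the key above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function names, the 2-D single-head shapes, the boolean mask convention (True means "may attend"), and the large negative fill value are all assumptions made for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row-wise max before exponentiating, for numerical stability.
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v, mask=None):
    """Single-head attention on 2-D arrays (hypothetical helper).

    q: (n, d_k), k: (m, d_k), v: (m, d_v)
    mask: optional boolean array of shape (n, m); True = position may be attended to.
    Returns the output (n, d_v) and the attention weights (n, m).
    """
    d_k = q.shape[-1]
    scores = q @ k.T                    # MatMul: raw scores, shape (n, m)
    scores = scores / np.sqrt(d_k)      # Scale: divide by sqrt(d_k)
    if mask is not None:
        # Mask (optional): assumed convention, blocked positions get a very
        # negative score so their softmax weight underflows to zero.
        scores = np.where(mask, scores, -1e9)
    weights = softmax(scores, axis=-1)  # Softmax: each row sums to 1
    output = weights @ v                # Second MatMul: weighted sum of values
    return output, weights


# Usage with random toy data: 3 tokens, d_k = 4, d_v = 2.
rng = np.random.default_rng(0)
q = rng.normal(size=(3, 4))
k = rng.normal(size=(3, 4))
v = rng.normal(size=(3, 2))

# Causal mask: token i may attend only to tokens 0..i.
causal_mask = np.tril(np.ones((3, 3), dtype=bool))

output, weights = scaled_dot_product_attention(q, k, v, mask=causal_mask)
print(output.shape)  # (3, 2)
print(weights.sum(axis=-1))
```

With the causal mask, the first token can attend only to itself, so its output row equals its own value vector. Later rows blend the values of all earlier positions.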